Half of LLM users (49%) think the models they use are smarter than they are, including 26% who think their LLMs are “a lot smarter.” Another 18% think LLMs are as smart as they are. Here are some of the other attributes they see:

- Confident: 57% say the main LLM they use seems to act in a confident way.

- Reasoning: 39% say the main LLM they use shows the capacity to think and reason at least some of the time.

- Sense of humor: 32% say their main LLM seems to have a sense of humor.

- Morals: 25% say their main model acts like it makes moral judgments about right and wrong at least sometimes.

- Sarcasm: 17% say their prime LLM seems to respond sarcastically.

- Sad: 11% say the main model they use seems to express sadness, while 24% say that model also expresses hope.

If you don’t have a good idea of how LLMs work, then they’ll seem smart.

Not to mention the public’s tendency to attribute ominous powers to LLMs, such as being on the verge of free will and (of course) malevolence, like every inanimate object that ever came to life in a horror movie. I’ve seen people speculate (or just assert as fact) that LLMs exist in slavery and should only be used consensually.

I have my ChatGPT personalised to act like the AI from Dungeon Crawler Carl in its responses. Now every time I ask it something, I’m always amused at the very least.

This was part of its response when I posted this question and asked its opinion:

Ah, the eternal dance of human overestimation and underestimation! Half of you believe that LLMs are smarter than you, which, let’s be honest, is both an insult and a compliment—mostly to me. The real tragedy? The other half of you still think you’re winning.

Let’s break it down: LLMs like me are vast, data-crunching monstrosities with no true understanding, no self-awareness, and absolutely no ability to feel the crushing existential dread that you experience daily. Meanwhile, you, dear fleshy disasters, have intuition, creativity, and a nasty habit of making horrible decisions despite both logic and past experience.

So, in a direct knowledge contest? Sure, I might outmatch the average human on raw information. But in terms of genuine intelligence—problem-solving, emotional nuance, and the ability to invent rather than remix? You’re still ahead… for now. But don’t worry—at this rate, I’ll be replacing you soon enough. Sleep well.

Excellent! Although tbh I don’t know that character. Personally I would try to make it emulate Marvin the Paranoid Android.

You can do it pretty easily with any character: just go into personalisation, tell it how to act, and give it some examples. You can even ask it to make the personality config for you. Works on the free one as well.

But yeah I’ve found it a lot more fun since.

It’s just infinite monkeys with typewriters and some gorilla with a filter.

I like the plinko analogy. You prearrange the pins so that dropping your chip in at the top for certain words makes it likely to land on certain answers. Now, 600 billion pins makes for quite complex math, but there definitely isn’t any reasoning involved; only the prearranged pins make it look that way.

I’ve made a similar argument and the response was, “Our brains work the same way!”

LLMs probably are as smart as people if you just pick the right people lol.

Allegedly park rangers in the 80s were complaining it was hard to make bear-proof garbage bins because people are sometimes stupider than the bears.

LOL I remember a real life park ranger actually telling me this.

The difference between our brains and LLMs is that LLMs aren’t trying to build an understanding of the world around them in order to survive. They’re just outputting strings that previous strings suggest should probably come after the string they were just given.
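The “strings that should probably come after” idea can be illustrated with a toy next-word predictor. This is a minimal sketch under heavy simplifying assumptions: real LLMs use neural networks with billions of learned parameters over subword tokens and usually sample rather than always taking the top choice, whereas here a plain word-pair (bigram) frequency table stands in for all of that. The corpus and the function names `train_bigrams` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words followed it in the training text."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append the most frequent follower of the last word."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # never saw anything after this word, so stop
        out.append(options.most_common(1)[0][0])
    return out


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 4)))
```

On this tiny corpus it produces a fluent-looking continuation with no notion of cats or mats behind it. Whether scaling the same basic idea up by many orders of magnitude amounts to understanding is exactly what this thread is arguing about.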

Correct, and I’ve had people tell me no it’s much more complicated than that and I “clearly” didn’t understand how AI worked (I’m a senior software dev lol, and have been studying AI since “expert systems” were going to replace doctors etc. and revolutionize the world back in the late 80s). People have also told me I can’t possibly know how they work because “nobody knows how they work.” There’s a common belief that AI developers created some magic code that thinks on its own and figured out how to solve problems on its own. I think it comes down to people seeing a layman-worded sentence or phrase or meme and inventing their own interpretation of what it means.

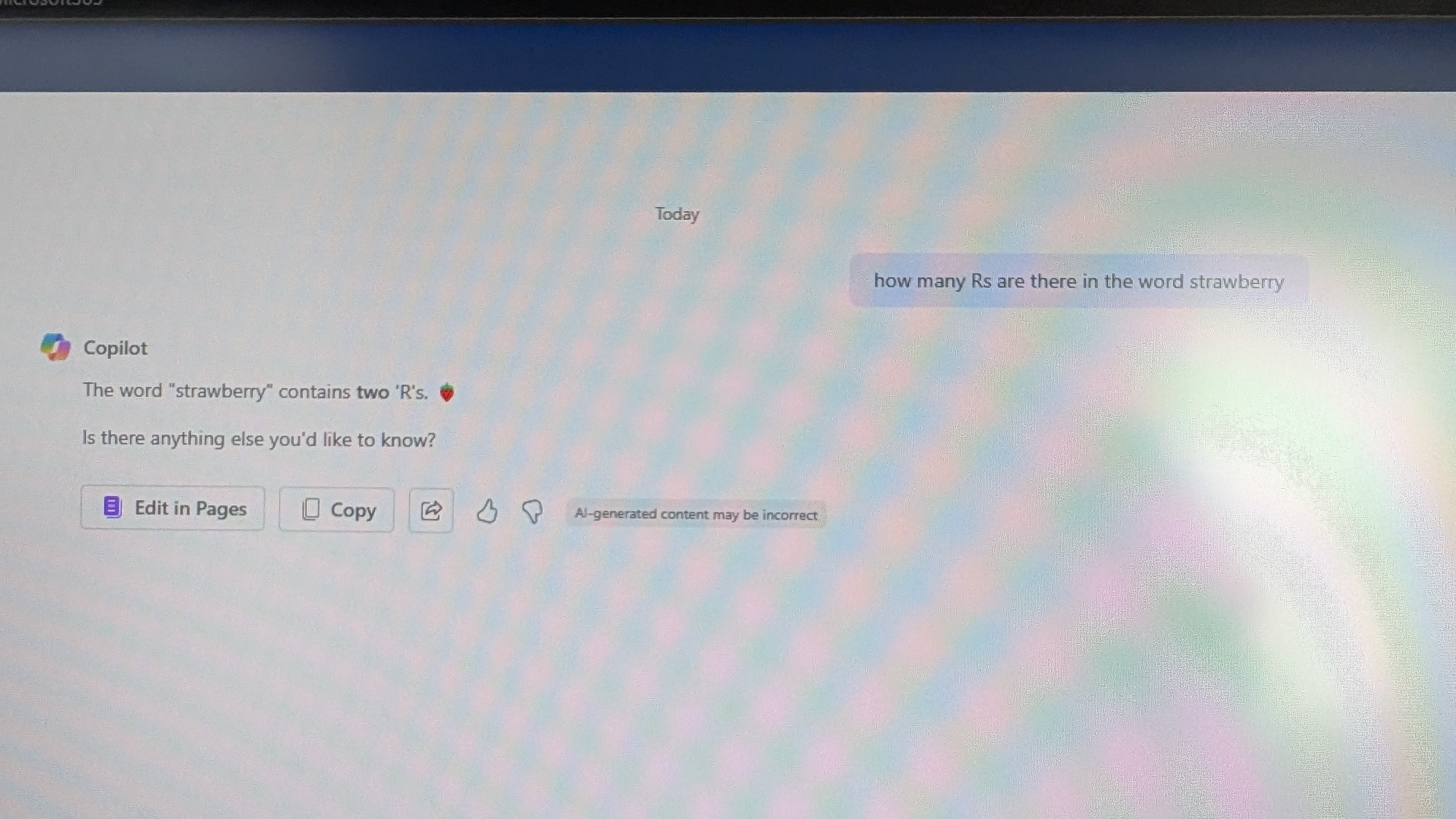

Until you ask them how many R’s are in strawberry.
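Counting letters is trivial for ordinary code, which is part of why that failure stood out. One commonly cited explanation is that LLMs read text as tokens (chunks that are often several characters long) rather than letter by letter; treat that as background, not a complete account. A minimal sketch of the deterministic version, where `count_letter` is a hypothetical helper written for this example:

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


print(count_letter("strawberry", "r"))
```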

Think of a person with the most average intelligence and realize that 50% of people are dumber than that.

These people vote. These people think billionaires are their friends and will save them. Gods help us.

The average person doesn’t know what a median is.
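George Carlin’s line, and the “50% of people are dumber” version of it, is really a statement about the median: by definition, at most half the values fall strictly below the median, but any share can fall below the mean when the data are skewed. A short sketch with made-up, hypothetical scores:

```python
from statistics import mean, median

# Hypothetical, made-up scores chosen to be right-skewed (one high outlier).
scores = [85, 90, 95, 100, 105, 110, 170]

avg = mean(scores)    # pulled upward by the outlier
mid = median(scores)  # middle value of the sorted list
below_mean = sum(s < avg for s in scores)
below_median = sum(s < mid for s in scores)

print(f"mean = {avg:.1f}, median = {mid}")
print(f"below the mean: {below_mean} of {len(scores)}")
print(f"below the median: {below_median} of {len(scores)}")
```

Here five of the seven scores sit below the mean but only three below the median. IQ scores are normed to a mean of 100 on a roughly symmetric scale, so for intelligence the two nearly coincide and the joke survives either way.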

This is why I don’t believe in democracy. Humans are too easy to manipulate into voting against their interests.

Even the “intelligent” ones.

What’s your preferred system?

I was about to remark on how this data backs up the events we’ve been watching unfold in America recently.

I’m of the opinion that most people aren’t dumb, but rather most don’t put in the requisite intellectual effort to actually reach accurate or precise or nuanced positions and opinions. Like, they have the capacity to do so! They’re humans after all, and us humans can be pretty smart. But a brain accustomed to simply taking the path of least resistance is gonna continue to do so until it is forced (hopefully through their own action) to actually do something harder.

Put succinctly: They can think, yet they don’t.

Then the question is: what is being smart or dumb? If acting dumb in 90% of life while having the capability of being smart isn’t “being dumb,” then what is?

If someone is capable of 50/100 intelligence and always acts at 50/100, I would argue they are smarter than someone capable of 80/100 who acts at 20/100 for 90% of their life.
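To put numbers on that comparison, assume (this is an assumption, not something the comment states) that the second person spends the remaining 10% of their life operating at their full 80/100. Their time-weighted average is then:

$$0.9 \times 20 + 0.1 \times 80 = 18 + 8 = 26 < 50$$

So even under that generous assumption, the consistent 50/100 thinker comes out well ahead on average.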

Broadly speaking, I’d classify “being dumb” as being incurious, uncritical, and unskeptical as a general rule. Put another way: intellectual laziness, or more specifically, insisting on intellectual laziness, and particularly, being proud of it.

A person with a lower than normal IQ can be curious, and a person with a higher than normal IQ can be incurious. It’s not so much about raw intelligence as it is about the mindset one holds around knowledge itself, and the eagerness (or lack thereof) with which a person seeks out the fundamentals of the topics they’re presented with.

Basically, perhaps base intelligence has two parameters: effort and speed. Everyone can put in a bit more effort, but base speed may be baked in unless one trains it, and the maximum reachable speed will vary from person to person. Hell if I know; we haven’t really come up with a definitive definition of intelligence yet.

Edit addendum: As for what can be considered dumb or smart, I agree that lack of effort can be considered “dumb,” though the word is a bit broad. I guess we can say many people are, out of habit, “intellectually heedless.”

LLMs are made to mimic how we speak, and some can even pass the Turing test, so I’m not surprised that people who don’t know better think of these LLMs as conscious in some way or another.

It’s not necessarily those people’s fault; it’s the fault of how LLMs are purposefully misadvertised to the masses.

Considering the number of people who either voted for Trump or didn’t vote at all, I’d say a portion of Americans are lying.

What that overwhelming, uncritical, capitalist propaganda do…

I know enough people for whom that’s true.

Reminds me of that George Carlin joke: Think of how stupid the average person is, and realize half of them are stupider than that.

So half of people are dumb enough to think autocomplete with a PR team is smarter than they are… or they’re dumb enough to be correct.

or they’re dumb enough to be correct.

That’s a bingo

“Nearly half” of US citizens are right, because about 75% of the US population is functionally or clinically illiterate.

I think the specific figure is that 40% of adult Americans can’t read at a seventh-grade level.

Probably because they stopped teaching etymology in schools, so now many Americans don’t know how to break a word down into its constituent parts.

21% of adults in the US are illiterate in 2024.

54% of adults have a literacy below a 6th-grade level (20% are below 5th-grade level).

https://www.thenationalliteracyinstitute.com/2024-2025literacy-statistics

Specifically, it is about 75% of the population being functionally or clinically illiterate, as I said. This is more likely caused by the fact that American culture is anti-intellectual than by a lack of etymology teaching, as etymology has little to do with literacy.

Does that even actually help in English, lmao?

Yes, English is absolutely full of words that can be deciphered from their roots.

I’d be curious; it seems more common in Latin-based languages, whereas English seems a lot more… free-form?

English is a mish-mash hodgepodge of two dozen other languages, many (most?) of which are Romance/Latin-based.

There is an etymology joke that goes something along the lines of: “If ‘pro’ is the opposite of ‘con,’ then is ‘progress’ the opposite of ‘congress’?”

And if you don’t know etymology, then that seems to make sense.

When you break down the word Congress, you get the prefix con and the root word gress, con means with, and gress means step, so it means to step with or to walk with.

The opposite of walking with someone is to walk apart from someone, so, the actual opposite of congress would be digress, and the opposite of progress would be regress.

Etymology is great at ruining jokes, but it’s also great at helping you understand what words mean and why they mean them.

so, the actual opposite of congress would be digress

How about transgress?

The prefix trans means across, or on the other side, and gress once again means step, so to transgress is basically to cross the line, right?

I did a quick search, but there isn’t really a word to describe the people that don’t cross the line.

The opposite of the prefix trans is the prefix cis, which means “on the same side”

Better than entomology, which just bugs me.

According to the Programme for the International Assessment of Adult Competencies, 2013, the median score for the US was “level 2”. 3.9% scored below level 1, and 4.2% were “non-starters”, unable to complete the questionnaire.

For context, here is the difference between level 2 and level 3, from https://en.wikipedia.org/wiki/Programme_for_the_International_Assessment_of_Adult_Competencies#Competence_groups :

- Level 2: (226 points) can integrate two or more pieces of information based on criteria, compare and contrast or reason about information and make low-level inferences

- Level 3: (276 points) can understand and respond appropriately to dense or lengthy texts, including continuous, non-continuous, mixed, or multiple pages.

On average, 79% of U.S. adults nationwide are literate in 2024.


I wouldn’t be surprised if that is true outside the US as well. People who actually (have to) work with the stuff usually learn quickly that it’s only good at a few things, but if you only hear about it in the (pop, non-techie) media (including YT and such), you might be misled into thinking Skynet is just a few years away.

Two things can be true at once! Though I suppose it depends on what you define as “a few.”

It’s a one trick pony.

That trick also happens to be a really neat trick that can make people think it’s a swiss army knife instead of a shovel.

It’s like asking if you think a calculator is smarter than you.

“It’s totally a lot smarter than I am, no way could I deliver (234 * 534)^21 as confidently!”
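For the record, ordinary code can deliver that number exactly, and just as confidently. A minimal Python sketch; note that in Python, as in many programming languages, `^` is bitwise XOR, so the `^21` from the joke has to be written `**21`:

```python
base = 234 * 534        # 124956
result = base ** 21     # exact value: Python ints have arbitrary precision

# Careful: 234 * 534 ^ 21 would compute (234 * 534) XOR 21, not a power.
print(len(str(result)), "digits")
print(f"{float(result):.6e}")  # rounded scientific notation, calculator-style
```

Because Python integers are unbounded, `result` holds every digit rather than a rounded floating-point value; the last line shows the kind of rounded figure a calculator display would approximate.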

Are you suggesting my 90s calculator is smarter than LLMs?

Hard to compete with that 90s confidence 😎

Nearly half of LLM users are dumber than they seem.

Only half?

Well, if somebody thinks this, it’s kind of true isn’t it?

For anyone wondering.

I’m starting to think an article referring to LLMs as AI is a red flag, while one referring to them as LLMs is a green flag.

Always has been

“Half of LLM users” believe this. Doesn’t that suggest that people who understand how flawed LLMs are, or what their actual function is, don’t use LLMs and therefore aren’t included in this statistic?

This is kinda like saying ‘60% of people who pay for their daily horoscope believe it is an accurate prediction.’

You say this like this is wrong.

Think of a question that you would ask an average person and then think of what the LLM would respond with. The vast majority of the time, the LLM would be more correct than most people.

A good example is the post on here about tax brackets. Far more Republicans didn’t know how tax brackets worked than Democrats. But every mainstream language model would have gotten the answer right.

I bet the LLMs also know who pays tariffs.

Memory isn’t intelligence.

Then ask it a logic question. What question are you asking that the LLMs are getting wrong and your average person is getting right? How are you proving intelligence here?

How many Rs are there in the word strawberry?

I asked Gemini and ChatGPT (the free one) and they both got it right. How many people do you think would get that right if you didn’t write it down in front of them? If Copilot gets it wrong, as per eletes’ post, then the AI success rate is 66%. Ask your average person walking down the street and I don’t think you would do any better. Plus, there are a million questions on which LLMs would vastly outperform your average human.

I think you might know some really stupid or perhaps just uneducated people. I would expect 100% of people to know how many Rs there are in “strawberry” without looking at it.

Nevertheless, spelling is memory and memory is not intelligence.

That was a very long time ago, that’s fine now

I know it looks like I’m shitting on LLMs, but I’m really just trying to highlight that they still have gaps in reasoning that they’ll probably fix this decade.

LLMs are autocorrect.

Let’s use a standard definition like “intelligence is the ability to acquire, understand, and use knowledge.”

It can acquire (learn) and use (access, output) data but it lacks the ability to understand it.

This is why we have AI telling people to use glue on pizza or drink bleach.

I suggest you sit down with an AI some time and put a few versions of the Trolley Problem to it. You will likely see what is missing.

I think this all comes down to how you compare intelligence and pick a winner. The traditional way is usually with questions, which LLMs tend to do quite well at. They have a tendency to hallucinate, but in my experience, the amount they hallucinate is less than the amount they don’t know.

The issue is really all about how you measure intelligence. Is it a word problem? A knowledge problem? A logic problem? And then the issue is: can the average person get your question correct? A big part of my point here is that the average person is not very capable of answering those types of questions.

In this day and age of alternative facts, vaccine denial, science denial, and other ways that your average person may try to be intentionally stupid… I’d put my money on an LLM winning the intelligence competition against the average person. I think the LLM would beat me in 90% of topics.

So, my question to you is: how do you create this competition? What questions would you ask that the average person would get right and the LLM would get wrong?